Hello everyone! We are overwhelmed by the interest and support we received for our flagship CS-1 product announcement at Supercomputing last week. We remain grateful to Argonne National Lab for being our first customer and for sharing the story of both the workloads they are accelerating with the CS-1 and their experience deploying it in their datacenter.

We had a fantastic turnout at Supercomputing – the booth was bustling all day, every day! We enjoyed hosting visitors and answering their questions about the features of the CS-1, and about the whys and hows of the engineering challenges we overcame in building it.


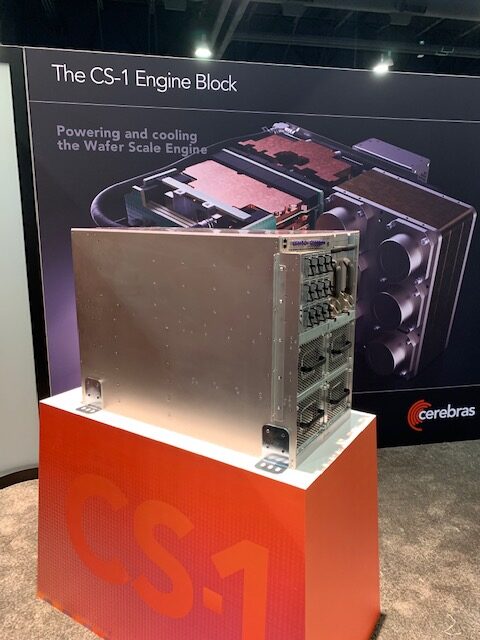

The CS-1 at our Supercomputing booth

Members of the Cerebras team at our Supercomputing booth

Our co-founder Jean-Philippe Fricker and I also relished the opportunity to deliver a technical talk on the lessons we learned when designing, engineering and deploying the CS-1.

The four key lessons we talked about were:

- Packaging – With the Wafer-Scale Engine (WSE) being the industry’s first-ever wafer-scale integrated chip, we found that traditional packaging techniques weren’t up to the task. Thermal expansion mismatch, power delivery and heat removal were critical problems to solve. We solved them by deploying a special connector between the PCB and the WSE, and by fanning out I/Os along the edges of the wafer. Cross-scribe-line die-to-die interconnect was the key innovation that allowed us to reach wafer scale.

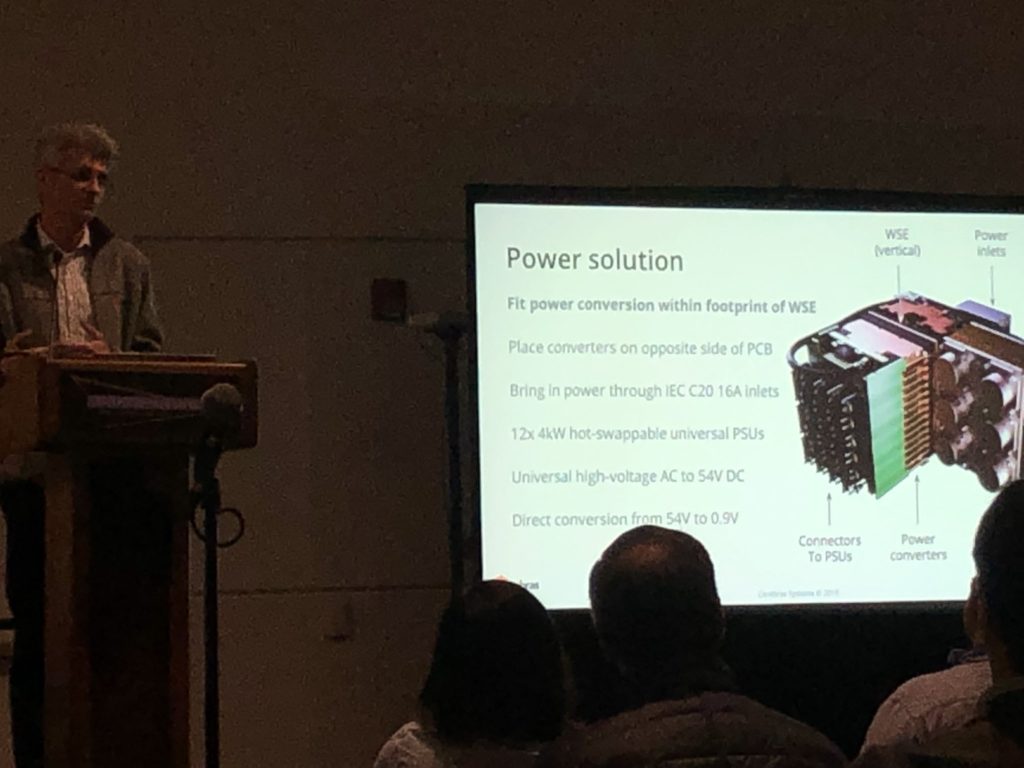

- Power Delivery – The WSE consumes 15 kW of power at peak. While this sounds like a lot, on a per-rack-unit basis the power density is about the same as that of a standard CPU or GPU rack server. Still, delivering it was challenging, because traditional methods such as feeding power in from the periphery, power regulators and multi-stage power conversion topologies would not provide the necessary efficiency. We solved this by placing the power converters on the opposite side of the PCB from the wafer, converting universal high-voltage AC to 54 V DC, and then converting directly from 54 V to 0.9 V. And we did all of this with standard IEC C20 16 A power inlets and built-in PSU redundancy!

- Cooling – Of course, cooling a 15 kW chip is no joke either! The wafer’s large surface area rules out traditional cooling approaches, and we found phase-change cooling methods to be impractical. The thermal expansion mismatch between the PCB and the wafer, mentioned above, was also crucial to solve. The CS-1’s cooling solution combines an internal, closed-loop, water-based coolant, circulated by a set of hot-swappable redundant pumps, with a set of hot-swappable fans that blow cold air over a heat exchanger to cool that internal coolant. As a result, the CS-1 fits within the capabilities of any standard datacenter.

- Data I/O – The WSE’s compute resources require a constant stream of high-speed data: keeping the wafer busy takes 1.2 Tbit/s of I/O bandwidth, more than a single server can supply. The CS-1 manages its connections with an on-board local CPU. Data is ingested over twelve 100 Gb Ethernet links, which connect to any standard datacenter switch and feed on-board optical transceivers. These terminate at an FPGA, which transfers the data to the WSE.
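To get a feel for why thermal expansion mismatch is such a problem at wafer scale (the Packaging lesson above), here is a back-of-the-envelope sketch using ΔL = Δα · L · ΔT. The expansion coefficients and the temperature swing are typical textbook values assumed for illustration, not CS-1 measurements; the 215 mm edge is simply the side of a square with the WSE’s 46,225 mm² area.

```python
# Rough in-plane thermal expansion mismatch between a wafer-scale silicon die
# and the PCB beneath it: delta_L = |alpha_pcb - alpha_si| * L * delta_T.
# All material values and the temperature swing are illustrative assumptions.

ALPHA_SILICON = 2.6e-6   # 1/K, typical CTE of silicon (assumed)
ALPHA_PCB = 15e-6        # 1/K, typical in-plane CTE of an FR-4 board (assumed)
EDGE_MM = 215.0          # side of a square with 46,225 mm^2 of area
DELTA_T_K = 60.0         # hypothetical idle-to-full-load temperature swing


def expansion_mismatch_um(alpha_a, alpha_b, length_mm, delta_t_k):
    """Difference in free thermal expansion of two materials, in micrometres."""
    return abs(alpha_a - alpha_b) * length_mm * delta_t_k * 1000.0  # mm -> um


mismatch_um = expansion_mismatch_um(ALPHA_PCB, ALPHA_SILICON, EDGE_MM, DELTA_T_K)
print(f"Edge-to-edge mismatch: {mismatch_um:.0f} um")  # about 160 um
```

Even with these modest assumptions, the two materials drift apart by over a tenth of a millimetre across the wafer, which illustrates why rigidly attaching the WSE to the PCB was not an option.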
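The Power Delivery numbers are easier to appreciate as currents. This sketch applies I = P / V for ideal, lossless conversion at the 15 kW peak, for both the 54 V bus and the 0.9 V rail. The 15-rack-unit chassis height used for the per-rack-unit figure is an assumption added for illustration, and real converters have losses, so the bus current shown is a lower bound.

```python
# Back-of-the-envelope power-delivery arithmetic for a 15 kW load.
# Assumptions (not from the talk): conversion losses are ignored and the
# system occupies 15 rack units.

PEAK_POWER_W = 15_000.0   # peak WSE power quoted in the post
BUS_VOLTAGE_V = 54.0      # intermediate DC bus voltage
CORE_VOLTAGE_V = 0.9      # core supply voltage
RACK_UNITS = 15           # assumed chassis height, for illustration only


def current_amps(power_w: float, voltage_v: float) -> float:
    """I = P / V for an ideal, lossless converter stage."""
    return power_w / voltage_v


bus_current = current_amps(PEAK_POWER_W, BUS_VOLTAGE_V)
core_current = current_amps(PEAK_POWER_W, CORE_VOLTAGE_V)
power_per_ru = PEAK_POWER_W / RACK_UNITS

print(f"54 V bus current:    {bus_current:,.0f} A")
print(f"0.9 V rail current:  {core_current:,.0f} A")
print(f"Power per rack unit: {power_per_ru:,.0f} W")
```

More than 16,000 A at under a volt is why converting directly from 54 V, close to the wafer, matters: every extra conversion stage or long low-voltage path adds resistive loss at those currents.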
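In the Cooling design described above, the internal water loop and the air passing over the heat exchanger must each carry the same heat. A rough sizing with Q = ṁ · c_p · ΔT shows what 15 kW implies for each. The fluid properties are standard approximate values; both temperature rises are hypothetical choices made for illustration and say nothing about the CS-1’s actual operating points.

```python
# Coolant and airflow needed to carry a given heat load, from Q = m_dot * c_p * dT.
# The heat load matches the post's 15 kW figure; the temperature rises are
# illustrative assumptions, not CS-1 specifications.

HEAT_LOAD_W = 15_000.0

WATER_CP = 4186.0        # J/(kg*K), specific heat of water
WATER_DENSITY = 1000.0   # kg/m^3, approximate
WATER_DELTA_T = 10.0     # K, hypothetical coolant temperature rise

AIR_CP = 1005.0          # J/(kg*K), specific heat of air
AIR_DENSITY = 1.2        # kg/m^3, air near room temperature
AIR_DELTA_T = 15.0       # K, hypothetical air temperature rise across the exchanger


def volumetric_flow_m3s(heat_w, cp, density, delta_t):
    """Volumetric flow (m^3/s) needed to absorb heat_w with a delta_t rise."""
    mass_flow = heat_w / (cp * delta_t)   # kg/s
    return mass_flow / density


water_lpm = volumetric_flow_m3s(HEAT_LOAD_W, WATER_CP, WATER_DENSITY, WATER_DELTA_T) * 60_000
air_m3s = volumetric_flow_m3s(HEAT_LOAD_W, AIR_CP, AIR_DENSITY, AIR_DELTA_T)

print(f"Internal water loop:     {water_lpm:.1f} L/min")
print(f"Air over heat exchanger: {air_m3s:.2f} m^3/s")
```

Under these assumptions the air side needs thousands of times more volumetric flow than the water loop, which is why heat is first collected by liquid at the wafer and only then handed to air through a heat exchanger.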
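The Data I/O figures are self-consistent: twelve 100 Gb Ethernet links add up to exactly the 1.2 Tbit/s the WSE needs. The sketch below checks that and counts feeder servers, assuming each server sustains a hypothetical 100 Gbit/s (one saturated 100 GbE port). It works with line rates and ignores protocol overhead, so real payload throughput would be somewhat lower.

```python
import math

# Aggregate ingest bandwidth of twelve 100 GbE links, plus a rough count of
# feeder servers. PER_SERVER_GBPS is a hypothetical sustained rate per server.

LINKS = 12
LINK_GBPS = 100.0          # 100 Gb Ethernet line rate per link
REQUIRED_GBPS = 1200.0     # 1.2 Tbit/s quoted in the post
PER_SERVER_GBPS = 100.0    # hypothetical: one server saturating a single 100 GbE port

aggregate_gbps = LINKS * LINK_GBPS
aggregate_gbytes_per_s = aggregate_gbps / 8          # bits -> bytes
servers_needed = math.ceil(REQUIRED_GBPS / PER_SERVER_GBPS)

print(f"Aggregate line rate: {aggregate_gbps / 1000:.1f} Tbit/s "
      f"({aggregate_gbytes_per_s:.0f} GB/s)")
print(f"Meets requirement: {aggregate_gbps >= REQUIRED_GBPS}")
print(f"Feeder servers at {PER_SERVER_GBPS:.0f} Gbit/s each: {servers_needed}")
```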

By overcoming all these challenges, Cerebras solved the problem of wafer-scale compute for the first time in the history of the chip design industry, and did so while fitting within the capabilities of any datacenter. The result, the Cerebras CS-1, is the world’s fastest AI compute solution: a full system, packaged with robust software, that meets users where they are. It is the only comprehensive solution for the vast and growing compute requirements of deep learning workloads.

It was heartwarming to see a room full of our industry peers and answer their questions after the talk. We’ve since received several notes of praise for the talk and are truly appreciative. Take a look at our slides here.

My colleague Natalia Vassilieva and I also enjoyed participating in several Birds of a Feather sessions at SC. We valued all our planned and unplanned meetings with past and future collaborators, and we look forward to kicking off a successful year of partnerships and growth.

All in all, it was a very successful outing for Cerebras! We would love to hear from you – please reach out if you have any questions or are interested in learning more about how the CS-1 suits your Deep Learning needs.
